Research

Auditory neurodevelopment of preterm infants

At a time when the developing human brain typically receives intrauterine auditory stimulation, preterm infants are instead exposed to the potentially harsh acoustic environment of the neonatal intensive care unit (NICU). With today's medical care, infants born nearly 4 months premature (around 23-24 weeks' gestation) can survive to adulthood. However, preterm infants are at higher risk than term-born infants for several auditory and cognitive disorders, including hearing loss, auditory neuropathy, auditory processing disorder, speech/language developmental delays, autism, and ADHD. Our research in this area examines the NICU acoustic environment and neonatal medical care to identify potentially noxious acoustic stimuli and ototoxic medications or treatments. We also use neuroimaging (MRI) and neurophysiological (auditory brainstem response) techniques to track auditory neural development in preterm infants. The ultimate goal of this research is to (1) develop interventions that optimize auditory experience for preterm infants and thereby (2) promote healthy auditory brain development, speech perception, and language acquisition during infancy and childhood. This research was recently featured by our institution and in the news.

The contribution of extended high frequencies to speech/voice perception

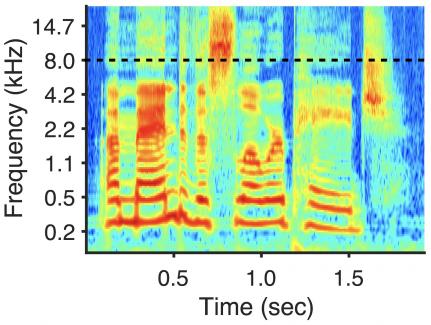

Humans have been endowed with an auditory system sensitive to a broad range of frequencies and a vocal apparatus that produces energy across that same range, suggesting that these two systems have been tuned to each other over the species' lifetime. However, speech/voice perception research and technology have, for various reasons, focused largely on the low-frequency portion of the speech spectrum. For example, your cell phone probably transmits speech energy only at frequencies below 4000 Hz, though human voices produce considerable energy at higher frequencies. HD voice (a.k.a. wideband audio) applications (e.g., Skype) and hearing aids are moving toward representing higher frequencies. Extended high-frequency energy (energy produced at frequencies >8 kHz) provides the auditory brain with useful information for speech and singing voice perception, including cues for speech source location, speech/voice quality, vocal timbre, and even speech intelligibility. These cues are likely used more readily by children, who typically have much more sensitive high-frequency hearing than adults. For example, school-aged children use high-frequency energy when learning new words, and show significant word-learning deficits when deprived of the high frequencies. Our research in this area aims to uncover the ecological value of extended high-frequency hearing and its utility in everyday speech/voice perception. Here are a few examples of what the high frequencies sound like when isolated. See if you can figure out whether each voice is male or female and what is being spoken or sung. (You'll need a good set of high-fidelity headphones to hear the high frequencies well.)

Example 1 Example 2 Example 3 Example 4
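For readers curious how high frequencies can be isolated from a recording, here is a minimal sketch in Python. It is not the procedure used to create the examples above; it simply illustrates one common approach, a windowed-sinc high-pass filter with a cutoff at 8 kHz. The function names (`highpass_fir`, `apply_fir`, `rms`, `tone`), the 44.1 kHz sample rate, and the 101-tap filter length are all assumptions chosen for illustration, and the test signals are synthetic tones rather than real speech.

```python
import math


def highpass_fir(cutoff_hz, sample_rate_hz, num_taps=101):
    """Design a high-pass FIR filter by spectral inversion of a
    Hamming-windowed sinc low-pass filter (num_taps must be odd)."""
    if num_taps % 2 == 0:
        raise ValueError("num_taps must be odd")
    fc = cutoff_hz / sample_rate_hz          # cutoff in cycles per sample
    mid = (num_taps - 1) // 2
    lowpass = []
    for n in range(num_taps):
        k = n - mid
        # Ideal low-pass impulse response (sinc), with the k == 0 limit.
        ideal = 2.0 * fc if k == 0 else math.sin(2.0 * math.pi * fc * k) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2.0 * math.pi * n / (num_taps - 1))
        lowpass.append(ideal * window)
    gain = sum(lowpass)
    lowpass = [h / gain for h in lowpass]    # normalize to unity gain at 0 Hz
    # Spectral inversion: a delayed impulse minus the low-pass response.
    highpass = [-h for h in lowpass]
    highpass[mid] += 1.0
    return highpass


def apply_fir(signal, taps):
    """Filter a signal by direct convolution (output has the input's length)."""
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, h in enumerate(taps):
            if i - j >= 0:
                acc += h * signal[i - j]
        out.append(acc)
    return out


def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))


def tone(freq_hz, sample_rate_hz, num_samples):
    """Unit-amplitude sine tone, standing in for real voice energy."""
    return [math.sin(2.0 * math.pi * freq_hz * i / sample_rate_hz)
            for i in range(num_samples)]


if __name__ == "__main__":
    fs = 44100                                # assumed sample rate
    taps = highpass_fir(8000, fs)             # keep energy above 8 kHz
    low = apply_fir(tone(1000, fs, 1024), taps)
    high = apply_fir(tone(12000, fs, 1024), taps)
    # Skip the first len(taps) samples, where the filter is still filling.
    print("1 kHz tone level after filtering: ", rms(low[len(taps):]))
    print("12 kHz tone level after filtering:", rms(high[len(taps):]))
```

In this sketch the 1 kHz tone, which lies in the range a typical phone call carries, is almost entirely removed, while the 12 kHz tone passes through nearly unchanged. Applying the same filter to a voice recording would leave only the extended high-frequency portion.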

The ultimate goal of this research is to improve communication technology (hearing aids, cochlear implants, mobile phones), especially for pediatric patients with hearing loss. You can read a bit more about it in lay-language papers here and here. This research has been supported by the National Institute on Deafness and Other Communication Disorders (NIDCD), one of the National Institutes of Health (NIH).